1. Loomings

You will be aware, by now, of the cresting wave of excitement about generative AI. “Artificial intelligence”, in general terms, means any computer program which displays humanlike abilities such as learning, creativity, and reasoning,* while “generative AI” applies to AI systems that can turn textual prompts into works of — well, not art, at any rate, but maybe “content” better captures the quality and quantity of the resultant material. Generative AI can produce text (in the case of, for example, OpenAI’s ChatGPT), images (Midjourney being the poster child here) or even music (like Suno).

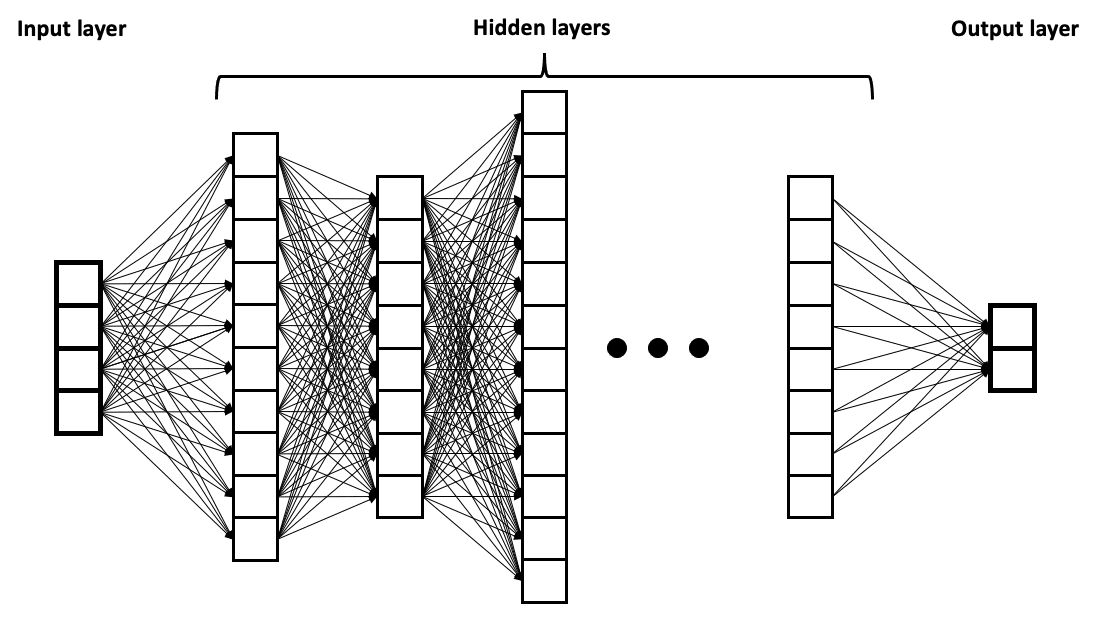

Broadly speaking, generative AI works like this. A layered collection of mathematical functions called an artificial neural network is adjusted from its default state — “trained”, in the parlance — by a succession of sample inputs such as texts or images. Once trained, this network can respond to new inputs by performing (and I am over-simplifying here to a criminal degree) a kind of “autocomplete” operation that creates statistically likely responses informed by its training data. As such, the works created by genAI systems are simultaneously novel and derivative: an individual text or image produced by your favoured AI tool may be new, but such outputs cannot escape the gravity of their corresponding training data. For AI, there truly is nothing new under the sun.†
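To make the “autocomplete” idea concrete, here is a deliberately tiny sketch in Python. It is not a neural network and is not how ChatGPT or any real system is built: it simply counts which word follows which in a made-up three-sentence corpus, then extends a prompt with the most frequent follower. The corpus and the function names `train` and `autocomplete` are invented for illustration.

```python
from collections import Counter, defaultdict


def train(corpus):
    """Count, for every word, which words follow it in the training text."""
    followers = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for current, following in zip(words, words[1:]):
            followers[current][following] += 1
    return followers


def autocomplete(followers, prompt, max_words=3):
    """Extend the prompt by repeatedly appending the most frequent follower."""
    words = prompt.lower().split()
    for _ in range(max_words):
        options = followers.get(words[-1])
        if not options:
            break  # the last word was never followed by anything in training
        words.append(options.most_common(1)[0][0])
    return " ".join(words)


# Hypothetical training data, invented for this sketch.
corpus = [
    "the whale swam in the sea",
    "the whale swam in the bay",
    "the whale swam away",
    "the ship sailed on the sea",
]
model = train(corpus)
completion = autocomplete(model, "the whale", max_words=3)
print(completion)  # the whale swam in the
```

Every word the toy model emits comes from its training sentences, which is the “novel but derivative” point in miniature: it can recombine what it has seen, but it cannot produce a word it was never shown.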

Despite the mundane underpinnings of most AI tools (“wait, it’s all just data and maths?”), many of them are genuinely good at certain tasks. This is doubly true when a network can be trained on a large enough set of inputs — say, for example, a slice of the billions of publicly-available web pages, a newspaper’s archive, or a well-stocked library. All of the most successful genAI systems have benefitted from opulent training datasets so that, for instance, ChatGPT is an eager, helpful and exceptionally knowledgeable conversationalist. Midjourney and Suno, too, can surprise with their ability to create pictures and songs that are at least halfway convincing as simulacra of human works. From a certain point of view, and perhaps with a dab of Vaseline on the lens, the promise of AI has already been fulfilled.

2. Pitfalls

Yet many avenues of criticism remain open to the generative AI sceptic. Most of them are valid, too.

To start at the beginning, training is a slow and intensive process. A neural network, or “model”, for text generation might have to consume billions of pieces of text to arrive at a usable state. Meta’s “Llama 3” system, for instance, which the company has made publicly available, was trained on 15 trillion unique fragments of text.3 The Llama 3 family comes in different flavours, but its largest version, the so-called “405B” model, contains more than 400 billion individual mathematical parameters.4‡ One paper estimates that OpenAI has spent at least $40 million training the model behind ChatGPT, and Google has spent $30 million on Gemini, while training of bigger models in the future may run to billions.6

Making use of those trained models is expensive, too. An OECD report on the subject says that a single AI server can consume more energy than a family home, and guzzles water into the bargain: a single conversation with ChatGPT is thought to use up around 500ml of water for power generation and cooling alone.7 When you converse with an AI model, you might as well have poured a glass of water down the drain.

GenAI’s thirst for training data begets another problem: those enormous training datasets often contain copyrighted works that have been used without their owners’ permission.§ The New York Times and other papers are currently suing Microsoft and OpenAI for copyright infringement, to pick only one prominent example.8 AI companies protest that simply training a model on a copyrighted work cannot infringe its copyright, since the work does not live on as a direct copy in the trained model — except that researchers working for those very same AI companies have debunked that assertion. Employees of Google and DeepMind, among others, have successfully tricked image-generation tools into reproducing some of the images on which they were trained,9 and it isn’t very hard to convince AI tools to blatantly infringe on copyrighted works.

This leads to a related criticism. For the likes of Google to have been ignorant of the training data hiding in their AI models is a sign that it is, at a fundamental level, really difficult to comprehend what is going on inside these things.10 The basic operating principles of a given model will be understood by its developers, but once that model’s billions of numerical parameters have been massaged and tweaked by the passage of trillions of training inputs, there is too much data in play for anyone to truly understand how it is all being used. In my day job I work with medical AI tools, and the doctors who use them worry acutely about this issue. The computer can tell them that a patient may have cancer, but it cannot tell them why it thinks that. The “explainability” of AI still has a long way to go.

Then there are the hallucinations. This evocative term came out of computer vision work in the early 2000s, where it referred to the ability of AI tools to add missing details to images. Then, hallucination was a good thing, since this was what these tools were trying to accomplish in the first place. More recently, however, “hallucination” has come to mean the way that genAI will sometimes exhibit unpredictable behaviour when faced with otherwise reasonable tasks.11 Midjourney and other image generators were, for a long time, prone to giving people extra fingers or limbs.12 An AI tool trialled by McDonald’s put bacon on top of ice cream and mistakenly ordered hundreds of chicken nuggets.13 OpenAI’s speech-to-text system, Whisper, fabricated patients’ medical histories and medications in an alarming number of cases.14 True, these are not hallucinations in the human sense of the word. It’s more accurate to call them ghosts in the probabilistic machine — paths taken through the neural net which reveal that mathematical sense does not always equate to common sense. But whatever they are called, the results can be startling at best and dangerous at worst.

There are also philosophical objections. At issue is this: humans, it turns out, want to work. And not only to work, but to do good, useful work. Back in the nineteenth century, the Luddites worried about skilled workers being dispossessed by steam power.15 The proponents of the fin de siècle Arts and Crafts movement, too, epitomised by the English poet and textile designer William Morris,|| agitated for a world where people made things with their own two hands rather than submit to the stultifying, repetitive labour of the production line. Whether for economic or spiritual reasons, both recognised the value of human craft.

More recently, the anarchist and anthropologist David Graeber expressed similar sentiments in a widely-read 2013 essay, “On the Phenomenon of Bullshit Jobs”. In it, he notes that the twentieth century’s relentless increases in productivity have gifted us not fewer working hours or a boom in human happiness but rather a proliferation of low-paid, aimless jobs, in which workers twiddle their thumbs in make-work roles of whose pointlessness they are acutely aware.16

Neither David Graeber nor his historical counterparts were complaining about AI, but the point stands. If corporations view genAI as a means to replace expensive human workers (and to be clear, that is exactly how they see it,17 just like mill owners and car manufacturers before them), then we are in for yet more “bullshit jobs”.

None of these complaints should be all that surprising to us. All computers eat energy and data and turn them into heat and information, so if we force-feed them with gigantic quantities of the former then we will get commensurately more of the latter. And if history has taught us anything, it is that technologies are tools rather than panaceas, and that work-free utopias are far less durable than, say, exploitative labour laws and unequal distributions of wealth.

3. The bomb pulse

To backtrack to an earlier and more optimistic point, in many cases AI tools can be remarkably good at their jobs. A friend of mine likened the large language models that underpin most text-based generative AI systems to an intern who has read the entire internet: they will not always make the same deduction or inference that you would have done, but their breadth of knowledge is astounding. It is hard to argue that we have not built something incredible here. If we can stomach the cost in power and in water, then genAI promises to not only solve problems for us, but to do so at a ferocious rate — and here is where a new and interesting class of AI problems arises. To see why, let me take you back to the heyday of another morally ambiguous technological marvel.

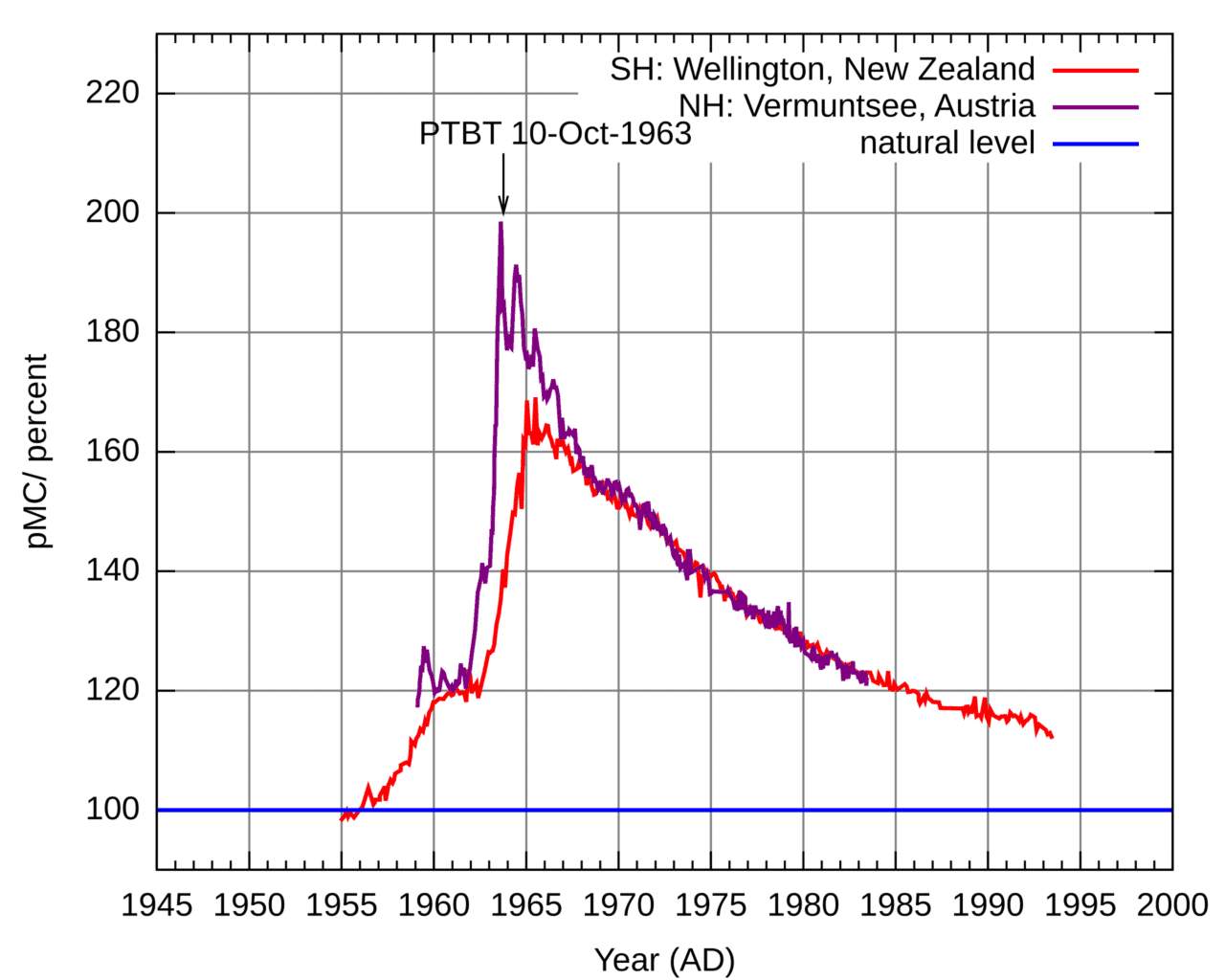

Nuclear weapons testing, where the likes of the USSR, USA and UK exploded hundreds of atomic bombs in the air and underground, reached a peak in 1961-62 after which a test ban treaty led to a dramatic slowdown.18 But the spike in tests before the ban, which contaminated Pacific atolls and Kazakh steppe alike, sent vast clouds of irradiated particles into the atmosphere that would leave a lasting mark on every living thing on earth.19,20

That mark was the work of a massive rise in the amount of atmospheric carbon-14, a gently radioactive isotope of carbon that filters into human and animal food chains through photosynthesis and the exchange of carbon dioxide between the air and the ocean. Carbon-14 is essential to radiocarbon dating, because it allows researchers to match the amount of carbon-14 in a material (taking into account the rate at which it decays into stable nitrogen-14) with a calibration curve that shows how much carbon-14 was floating around in earth’s biosphere at any particular point in the past.21 It is a powerful and useful tool, with everyone from archaeologists to forensic criminologists using it to determine the age of organic matter.
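The decay half of that calculation is simple enough to sketch. The snippet below assumes the commonly quoted carbon-14 half-life of about 5,730 years and, crucially, pretends that the atmosphere has always held the same amount of carbon-14. That second assumption is false, which is exactly why real radiocarbon dating needs a calibration curve; the function names are made up for this example.

```python
import math

# Commonly quoted half-life of carbon-14, in years (an assumption of this sketch).
HALF_LIFE_YEARS = 5730


def fraction_remaining(age_years):
    """Fraction of the original carbon-14 left after the given number of years."""
    return 0.5 ** (age_years / HALF_LIFE_YEARS)


def uncalibrated_age(fraction):
    """Years needed for carbon-14 to decay to the given fraction of its starting level.

    Assumes a constant starting level, so the result is NOT a calendar date;
    a real laboratory would correct it against a calibration curve.
    """
    if not 0 < fraction <= 1:
        raise ValueError("fraction must be in the range (0, 1]")
    return -HALF_LIFE_YEARS * math.log2(fraction)


print(round(uncalibrated_age(0.5)))   # 5730
print(round(uncalibrated_age(0.25)))  # 11460
```

A sample holding half of its original carbon-14 is one half-life old, and a quarter means two half-lives. The calibration curve then translates such raw figures into calendar years by accounting for how atmospheric carbon-14 actually varied.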

The so-called bomb pulse of carbon-14, then, which was measured and quantified to the minutest detail, made the calibration curve much, much more accurate. It became possible to date just about every living organism born after 1965 to an accuracy of just a few years — a dramatic improvement on the pre-atomic testing era, when carbon-14 concentrations ebbed and flowed across centuries and millennia in a confusing and sometimes contradictory manner.22 If a mushroom cloud could ever be said to have had a silver lining, it was the bomb pulse.

Just as nuclear testing pushed carbon-14 through the roof, generative AI is dramatically increasing the quantity of artificially-generated texts and images in circulation on the web. For instance, a recent study found that almost half of all new posts on Medium, the blogging platform, may have been created using genAI.23 Another report estimated that billions of AI-generated pictures are now being created every year.24 We are living through a bomb pulse of AI content.

Unlike its nuclear counterpart, however, the explosion of AI content is making it harder, not easier, to make observations about our world. Because AI apps are incapable of meaningfully transcending their training data, and because a non-trivial proportion of AI outputs can be objectively quantified as gibberish, the rise in AI-generated material is polluting our online data rather than enriching it.

To wit: Robyn Speer, the maintainer of a programming tool that looks up word frequencies in different languages, mothballed her project in September 2024 because, she wrote, “the Web at large is full of slop generated by large language models, written by no one to communicate nothing.”25 Elsewhere, Philip Shapira, a professor at Manchester University in the UK, connected an inexplicable rise in the popularity of the word “delve” with ChatGPT’s tendency to overuse that same word.26,27 There is already enough AI “slop” out there to distort online language.

Indeed, there is now so much publicly available AI-generated data that training datasets are being contaminated by synthetic content. And if that feedback loop occurs too often — if AI models are repeatedly trained on their own outputs, or those from other models — then there is a risk that those models will eventually “collapse”, losing the breadth and depth of knowledge that makes them so powerful. “Model Autophagy Disorder”, as this theoretical pathology is called, is mad cow disease for AI.28 Where herds of cattle were felled by malformed proteins, AI models may be felled by malformed information.
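The feedback loop can be imitated with a toy simulation. What follows is not the method used in the research on model collapse, only a sketch under loud assumptions: the “model” is nothing more than a table of word counts, “training” means counting words, and “generating” means drawing a new corpus of the same size from those counts. The starting corpus, the seed and the function names are all invented.

```python
import random
from collections import Counter


def next_generation(corpus, rng):
    """'Train' on a corpus by counting words, then 'generate' a new corpus
    of the same size by sampling words in proportion to those counts."""
    counts = Counter(corpus)
    words = list(counts)
    weights = [counts[word] for word in words]
    return rng.choices(words, weights=weights, k=len(corpus))


def simulate(generations=30, seed=0):
    """Return the vocabulary size after each round of training on own output."""
    rng = random.Random(seed)
    # Hypothetical "human" corpus: two common words plus forty rare ones.
    corpus = ["the"] * 40 + ["whale"] * 20 + [f"rare{i}" for i in range(40)]
    vocab_sizes = [len(set(corpus))]
    for _ in range(generations):
        corpus = next_generation(corpus, rng)
        vocab_sizes.append(len(set(corpus)))
    return vocab_sizes


sizes = simulate()
print(sizes)
```

Because each generation can only reuse words that survived the previous one, the vocabulary can never grow and the rare words steadily vanish. That loss of the long tail is the sense in which a model fed on its own output forgets the breadth of what it once knew.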

4. Burning books

And so, if I may be permitted (encouraged, even) to bring things to a point, I will submit as concluding evidence a story published by the tech news outlet 404 Media. Emanuel Maiberg’s piece, “Google Books Is Indexing AI-Generated Garbage”, reports that, well, Google Books appears to be indexing AI-generated garbage. (Never change, SEO.) Searching for the words “as of my last knowledge update” — a tell, like “delve”, of OpenAI’s ChatGPT service — Maiberg uncovered a number of books bearing ChatGPT’s fingerprints.29 That was in April this year, and Maiberg noted with cautious optimism that the AI books were mostly hidden farther down Google’s search results. The top results, he said, were mostly books about AI.
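The same kind of search is easy to run over any text you have to hand. This is a minimal sketch, not Maiberg’s method or any Google Books API: it counts case-insensitive occurrences of the two tells mentioned in this piece, and the names `TELL_PHRASES` and `find_tells` are invented for the example.

```python
# Tell-tale phrases taken from the article; extend the tuple as needed.
TELL_PHRASES = (
    "as of my last knowledge update",
    "delve",
)


def find_tells(text, phrases=TELL_PHRASES):
    """Return a dict of phrase -> number of case-insensitive occurrences.

    Matching is by plain substring, so "delve" also matches "delved".
    """
    lowered = text.lower()
    return {phrase: lowered.count(phrase) for phrase in phrases if phrase in lowered}


sample = "As of my last knowledge update, whales are mammals. Let us delve deeper."
print(find_tells(sample))
print(find_tells("Call me Ishmael."))
```

A hit is a hint rather than proof. A book about AI may quote these phrases quite legitimately, and plenty of human writers were fond of “delve” long before ChatGPT.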

In writing this piece I tried Maiberg’s experiment for myself. In the six months, give or take, since his article was published, the situation has reversed itself: on the first page of my search results, every entry bar one was partly or wholly the work of ChatGPT. (The lone exception was, as might be hoped, a book on AI.) Most results on the second page also were the products of AI. The genAI bomb pulse would appear to be gathering pace.

I’ll be honest: I had been ambivalent about generative AI until I hit the “🔍” button at Google Books. I’ve tried ChatGPT and Google Gemini as research tools, but despite their breadth of knowledge they sometimes missed details that I felt they should have known. I’m also one of those humans who enjoys doing their own work, so delegating writing or programming tasks to AI has never really been on the cards. If I need help with a problem as I write a book or design a software system, I’ll run a web search or ask a human for help rather than an environmentally-ruinous autocomplete algorithm on steroids. Not everyone has the same qualms, though, or the same needs, and that’s fine. But this AI-powered dilution of Google Books still feels like a step beyond the pale.

For millennia, books were humanity’s greatest information technology.¶ Portable, searchable, self-contained — but more than that, authoritative in some vague but potent way, each one guarded by a phalanx of editors, copyeditors, proofreaders, indexers and designers. Certainly, none of my own books could have come to pass without many other books written before them: I’ve lost count of the facts in them that rely upon some book or another found after a trawl through Google Books, the Internet Archive, or a physical library.

Books have never been perfect, of course. No artefact made by human hands ever could be. They can be banned or burned or ignored. They can go out of date or out of print. They can pander to an editor’s personal whims or a publisher’s commercial constraints, or be axed entirely on a lawyer’s recommendation. Google Books can be criticised too — ironically, it is accused mostly of the same lax approach to copyright as many AI vendors — yet it is an incredibly useful tool, acting as an omniscient meta-index to many of the world’s books. It would take a brave protagonist, I think, to argue that books have not been, on balance, a positive force.

None of this is to criticise AI itself. Costs will decrease as we figure out how to manage larger and larger datasets. Accuracy will increase as we train AI models more smartly and tweak their architectures. Even explainability will improve as we pry open AI models and inspect their internal workings.

Ultimately, the problem lies with us, as it always does. Can we be relied upon to use AI in a responsible way — to avoid poisoning our body of knowledge with the informational equivalent of malformed proteins? To remember that meaningful, creative work is one of the joys of human existence and not some burden to be handed off to a computer? In short, to spend a moment evaluating where AI should be used rather than where it could be used?

What’s left for me at the end of all this is a creeping, somewhat amorphous unease that we are unprepared for the ways in which generative AI will change books and our ability to access them. I don’t think AI will kill books in any real sense, just as ebooks have not killed physical books and Spotify has not killed radio, but unless we can restrain our worst instincts there is a risk that books — and by extension, knowledge — will emerge cheapened and straitened from the widening bomb pulse of generative AI.

1. Mirzadeh, Iman, Keivan Alizadeh, Hooman Shahrokhi, Oncel Tuzel, Samy Bengio, and Mehrdad Farajtabar. “GSM-Symbolic: Understanding the Limitations of Mathematical Reasoning in Large Language Models”. arXiv, October 7, 2024. https://doi.org/10.48550/arXiv.2410.05229.
2. Doshi, Anil R., and Oliver P. Hauser. “Generative AI Enhances Individual Creativity But Reduces the Collective Diversity of Novel Content”. Science Advances 10, no. 28 (July 12, 2024): eadn5290. https://doi.org/10.1126/sciadv.adn5290.
3. Mann, Tobias. “Meta Debuts Third-Generation Llama Large Language Model”. The Register.
4. The Latest AI news from Meta. “Llama 3.2: Revolutionizing Edge AI and Vision With Open, Customizable Models”.
5. Meta Llama. “Llama 3.2”. Accessed October 25, 2024.
6. Cottier, Ben, Robi Rahman, Loredana Fattorini, Nestor Maslej, and David Owen. “The Rising Costs of Training Frontier AI Models”. arXiv, May 31, 2024. https://doi.org/10.48550/arXiv.2405.21015.
7. Ren, Shaolei. “How Much Water Does AI Consume? The Public Deserves to Know”. The AI Wonk (blog).
8. Robertson, Katie. “8 Daily Newspapers Sue OpenAI and Microsoft Over A.I”. The New York Times, sec. Business.
9. Carlini, Nicholas, Jamie Hayes, Milad Nasr, Matthew Jagielski, Vikash Sehwag, Florian Tramèr, Borja Balle, Daphne Ippolito, and Eric Wallace. “Extracting Training Data from Diffusion Models”. arXiv, January 30, 2023. https://doi.org/10.48550/arXiv.2301.13188.
10. Burt, Andrew. “The AI Transparency Paradox”. Harvard Business Review.
11. Maleki, Negar, Balaji Padmanabhan, and Kaushik Dutta. “AI Hallucinations: A Misnomer Worth Clarifying”. arXiv, January 9, 2024. https://doi.org/10.48550/arXiv.2401.06796.
12. Hughes, Alex. “Why AI-Generated Hands Are the Stuff of Nightmares, Explained by a Scientist”. BBC Science Focus.
13.
14. Koenecke, Allison, Anna Seo Gyeong Choi, Katelyn X. Mei, Hilke Schellmann, and Mona Sloane. “Careless Whisper: Speech-to-Text Hallucination Harms”. arXiv, May 3, 2024. https://doi.org/10.48550/arXiv.2402.08021.
15. Conniff, Richard. “What the Luddites Really Fought Against”. Smithsonian Magazine.
16. Graeber, David. “On the Phenomenon of Bullshit Jobs”. STRIKE! Magazine.
17. Manyika, James, and Kevin Sneader. “AI, Automation, and the Future of Work: Ten Things to Solve for”. McKinsey & Company.
18. “Radioactive Fallout”. In Worldwide Effects of Nuclear War.
19. Nuclear Museum. “Marshall Islands”. Accessed October 25, 2024.
20. Kassenova, Togzhan. “The lasting toll of Semipalatinsk’s nuclear testing”. Bulletin of the Atomic Scientists (blog).
21. Hua, Quan. “Radiocarbon Calibration”. Vignette Collection. Accessed October 25, 2024.
22. Hua, Quan. “Radiocarbon: A Chronological Tool for the Recent past”. Quaternary Geochronology, Dating the Recent Past, 4, no. 5 (October 1, 2009): 378-390. https://doi.org/10.1016/j.quageo.2009.03.006.
23. Knibbs, Kate. “AI Slop Is Flooding Medium”. Wired.
24. Everypixel Journal. “AI Image Statistics for 2024: How Much Content Was Created by AI”.
25. Speer, Robyn. “Wordfreq/SUNSET.Md”. GitHub.
26. Shapira, Philip. “Delving into ‘delve’”. Philip Shapira (blog).
27. AI Phrase Finder. “The Most Common ChatGPT Words”.
28. Nerlich, Brigitte. “From contamination to collapse: On the trail of a new AI metaphor”. Making Science Public (blog).
29. Maiberg, Emanuel. “Google Books Is Indexing AI-Generated Garbage”. 404 Media.

- * Although as a new paper demonstrates, generative AI tools are almost certainly not performing any sort of robust logical reasoning. Researchers at Apple found that insignificant changes to input prompts can result in markedly different (and markedly incorrect) responses.1
- † Indeed, the research bears this out. Novels written with the help of AI were found to be better, in some senses, than those without — but they were also markedly more similar to one another.2
- ‡ That said, there are many smaller models out there. Llama’s own “1B” model can be run on a smartphone.5
- § I am entirely sure that my writing has been used to train sundry AI models, and if you have ever published anything online or in print, yours likely has been too.
- || Eric Gill, author of An Essay on Typography, was another leading light.
- ¶ An information technology so influential, one might even consider writing a book about it.

Comment posted by S. Minniear on

Thanks for the analysis.

Comment posted by Keith Houston on

Not at all! It’s an itch I’ve been meaning to scratch for a while. Thanks for reading.

Comment posted by Nancy Gilmartin on

Wow! Thank you for that!

Comment posted by Keith Houston on

Not at all! I hope it made sense, and was useful.

Comment posted by Steve Dunham on

Thank you. Now I think I understand AI (a little). A few thoughts came to mind:

1. Using newspapers as a training source: a news story about an area in which I am knowledgeable usually contains errors. I’ve heard others say this concerning their areas of knowledge, so I would guess that these sources are rife with errors (and, after all, they are only a first draft of history).

2. Lax approach to copyright: at one government contractor where I worked, I overheard a man say, “Copyright? What copyright? Ha, ha!” I have no trouble believing that a similar attitude infects others, and some people at that job did not seem to understand that it was unethical to paste content from Wikipedia, not cite the source, and charge the government for the report (not to mention the unreliability of Wikipedia).

PS: I am not a robot.

Comment posted by Keith Houston on

Hi Steve — training on unreliable data is a potential problem, certainly. I suspect that sporadic inaccuracies are probably smoothed out by the vast bulk of mostly correct data, but widely-held myths or misconceptions may well make it through training intact.

The likelihood that copyrighted works exist in a lot of AI training data really annoys me. It’s not the worst problem with AI by a long shot, but it does seem like AI companies have collectively decided to ask for forgiveness rather than permission. Not cool, in my opinion.

Thanks for the comments!

Comment posted by Steve Dunham on

I saw in the news today that a group of Canadian news publishers is suing OpenAI for using their copyrighted news content to train ChatGPT. I can see both sides: the news publishers own their content, but the AI may not be actually redistributing it and hence not reducing the publishers’ market. I’ll be interested to see what happens.

Comment posted by Keith Houston on

Absolutely — this looks like it will be Canada’s test case on the matter.

I think the publishers’ argument will be that the AI can infringe on their copyright, since the AI can regurgitate knowledge gleaned from newspaper articles. The impression I get is that a lot of people already use ChatGPT and the like as a substitution for web searches, and presumably the worry is that it may take over from other media outlets (such as newspapers) in due course.